Foxglove now offers improved support for visualizing robot models described by the Unified Robot Description Format (URDF) in the 3D panel.

Foxglove now offers improved support for visualizing robot models described by the Unified Robot Description Format (URDF) in the 3D panel.

Automatically fetch URDF meshes over a Foxglove WebSocket connection

When using the Foxglove bridge (v0.7+) to connect to a live data source, Foxglove now automatically fetches any URDF meshes referenced by package:// URLs over the Foxglove WebSocket connection.

This means you no longer have to keep mesh files on your local file system, set ROS_PACKAGE_PATH, and load the meshes with the Foxglove desktop app. Instead, you can open a WebSocket connection from the Foxglove web app and fetch mesh files from a remote PC or from an isolated environment such as a Docker container.
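For context, the local-file workflow that this replaces resolves each package:// URL by searching the directories listed in ROS_PACKAGE_PATH. The Python sketch below illustrates that lookup under a simplifying assumption: each package lives in a directory named after the package, directly under one of the ROS_PACKAGE_PATH entries. Real ROS tooling locates packages through their package.xml manifests and searches recursively. Both function names are hypothetical and are not part of Foxglove or ROS.

```python
import os
from typing import Optional, Tuple
from urllib.parse import urlparse


def split_package_url(url: str) -> Tuple[str, str]:
    """Split 'package://<pkg>/<relative/path>' into (pkg, relative_path)."""
    parsed = urlparse(url)
    if parsed.scheme != "package":
        raise ValueError(f"not a package:// URL: {url}")
    # urlparse places the package name in netloc and the rest in path.
    return parsed.netloc, parsed.path.lstrip("/")


def resolve_package_url(url: str, ros_package_path: str) -> Optional[str]:
    """Return a local file path for a package:// URL, or None if not found.

    Simplified, hypothetical lookup: assumes <root>/<pkg>/<relative_path>
    for each root listed in ros_package_path (os.pathsep-separated).
    """
    pkg, rel = split_package_url(url)
    for root in ros_package_path.split(os.pathsep):
        if not root:
            continue  # skip empty entries such as a trailing separator
        candidate = os.path.join(root, pkg, rel)
        if os.path.isfile(candidate):
            return candidate
    return None
```

With the bridge serving meshes over the WebSocket connection, this search happens on the machine that actually has the files, so the client no longer needs a matching ROS_PACKAGE_PATH.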

Add URDFs via a URL, topic, or parameter

Previously, Foxglove only supported adding URDFs as custom layers to the 3D panel using URLs. Visualizing URDFs that were available via a topic or parameter other than /robot_description was not possible.

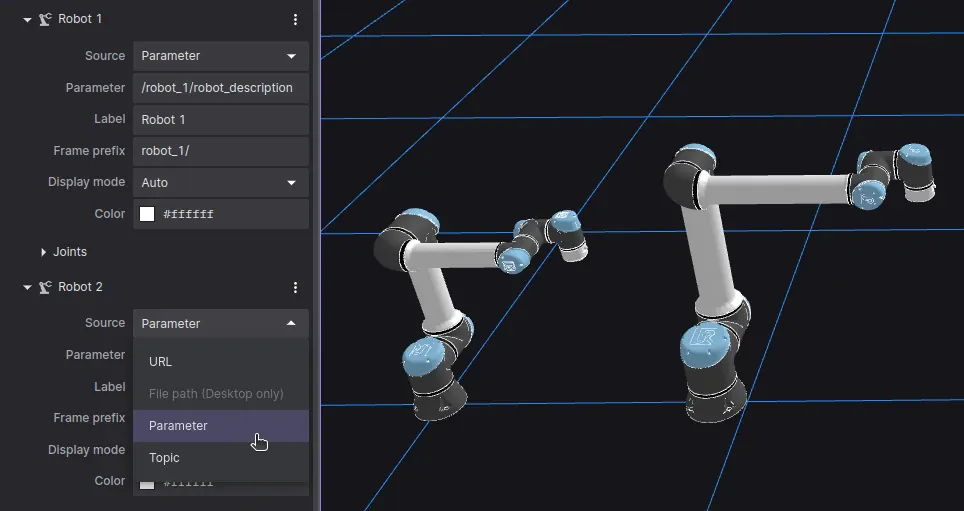

You can now specify a custom “Topic” or “Parameter” as the URDF’s “Source”. With the “Frame prefix” setting (i.e., tf_prefix), you can even visualize multiple robots that share the same URDF but publish their transforms under different frame prefixes.
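To illustrate what a frame prefix does, the sketch below maps URDF link names to the frame IDs that one robot's transforms would be looked up under. It assumes the prefix is joined to each link name with a single slash, following the common tf_prefix convention; this article does not specify how Foxglove composes the prefix internally, and the function name is hypothetical.

```python
from typing import Dict, Iterable


def prefixed_frame_ids(link_names: Iterable[str], frame_prefix: str) -> Dict[str, str]:
    """Map each URDF link name to a frame ID under frame_prefix.

    Assumed convention: '<prefix>/<link>', with stray slashes trimmed
    from the prefix. An empty prefix leaves link names unchanged.
    """
    prefix = frame_prefix.strip("/")
    if not prefix:
        return {name: name for name in link_names}
    return {name: f"{prefix}/{name}" for name in link_names}
```

Loading the same URDF twice with prefixes such as robot_1 and robot_2 then yields disjoint frame sets (robot_1/base_link versus robot_2/base_link), which is what lets two identical robots be drawn side by side.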

Visualize URDF collision geometries

The 3D panel’s “Display mode” setting allows you to switch between rendering a robot’s “Visual” or “Collision” geometries. Selecting “Auto” will show visual geometries by default, but will fall back to collision geometries if no visual geometries are available.
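The selection logic behind the “Display mode” options can be sketched in Python as shown below. The sketch parses a URDF with the standard library and reports, for each link, the geometry types that would be drawn. Two details are assumptions for illustration: the “Auto” fallback is applied per link, and the mode names are the lowercase strings "auto", "visual", and "collision". The function and the example URDF are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List

EXAMPLE_URDF = """
<robot name="demo">
  <link name="base_link">
    <visual><geometry><box size="1 1 1"/></geometry></visual>
    <collision><geometry><cylinder radius="0.5" length="1"/></geometry></collision>
  </link>
  <link name="arm">
    <collision><geometry><sphere radius="0.1"/></geometry></collision>
  </link>
</robot>
"""


def select_geometries(urdf_xml: str, display_mode: str) -> Dict[str, List[str]]:
    """Return {link name: [geometry types to draw]} for the given mode."""
    if display_mode not in ("auto", "visual", "collision"):
        raise ValueError(f"unknown display mode: {display_mode}")
    robot = ET.fromstring(urdf_xml)
    result: Dict[str, List[str]] = {}
    for link in robot.findall("link"):
        visuals = link.findall("visual")
        collisions = link.findall("collision")
        if display_mode == "visual":
            chosen = visuals
        elif display_mode == "collision":
            chosen = collisions
        else:
            # "auto": prefer visual geometry, fall back to collision geometry
            chosen = visuals if visuals else collisions
        # Each <geometry> holds one shape element such as <box> or <mesh>.
        result[link.get("name")] = [
            shape.tag for el in chosen for geom in el.findall("geometry") for shape in geom
        ]
    return result
```

On the example URDF, “Auto” draws the box for base_link and, because arm has no visual element, falls back to its collision sphere.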

With the new “Color” setting, you can also choose the color of collision geometries when the URDF does not specify one.
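The fallback behavior of the “Color” setting can be sketched as follows: use the RGBA value from an element's `<material><color rgba="..."/>` child when one is present, and otherwise use the configured color. This is an assumed version of the logic, for illustration only. Because the URDF specification attaches materials to visual elements, collision elements usually end up using the fallback. The hex format for the fallback color and both function names are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Tuple

RGBA = Tuple[float, float, float, float]


def hex_to_rgba(hex_color: str) -> RGBA:
    """Convert '#rrggbb' or '#rrggbbaa' to RGBA floats in [0, 1]."""
    h = hex_color.lstrip("#")
    if len(h) == 6:
        h += "ff"  # assume fully opaque when alpha is omitted
    if len(h) != 8:
        raise ValueError(f"bad hex color: {hex_color}")
    r, g, b, a = (int(h[i:i + 2], 16) / 255.0 for i in range(0, 8, 2))
    return (r, g, b, a)


def collision_color(element_xml: str, fallback_hex: str) -> RGBA:
    """Use the element's own material color if present, else the fallback."""
    elem = ET.fromstring(element_xml)
    color = elem.find("material/color")
    if color is not None and color.get("rgba"):
        r, g, b, a = (float(v) for v in color.get("rgba").split())
        return (r, g, b, a)
    return hex_to_rgba(fallback_hex)
```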

Stay tuned

With this expanded URDF support, visualizing your robot models in the context of a realistic 3D scene can be as simple as configuring a few settings. Whether you’re using Foxglove to monitor a live robot or triage an incident, the company hopes these improvements help you better understand how your robots sense, think, and act.
