The Sense One flash camera from Sense Photonics is a 3D, solid-state lidar that promises to help autonomous robots and vehicles see farther and more safely.

As autonomous mobile robots and vehicles spread from warehouses and factories to loading docks and streets, the need for safety and long-distance perception increases. The Sense One 3D solid-state lidar camera presented by Sense Photonics Inc. at MODEX 2020 last week is an example of improving sensor capabilities.

Erin Bishop, director of business development at Durham, N.C.-based Sense Photonics, discussed solid-state lidar and supply chain applications at MODEX in Atlanta. The company came out of stealth and released its Solid-State Flash LiDAR sensor last fall. Its 3D time-of-flight camera is intended to provide long-range sensing, can distinguish intensity, and works in sunlight.

The ability to detect intensity is important when perceiving things like forks on a forklift truck, which are often black, reflective, and close to the plane of a floor. Forklifts are involved in 65,000 accidents and 85 fatalities per year, according to the Occupational Safety and Health Administration.

From solar panels to flash lidar

The founders of Sense Photonics previously worked together at a solar power company, where they learned the core processes for manufacturing semiconductors. That prior experience in photonics and silicon is applicable to designing laser emitters on a curved substrate for a wider field of view.

“I’ve been at robotics companies for 10 years; I was at Omron Adept,” recalled Bishop. “I’ve used [Microsoft’s] Kinect cameras for unloading trucks, but all these robots need better 3D cameras.”

“Intel’s RealSense is good for prototypes and indoors, but there are a number of different attributes that matter a lot,” she told Robotics Business Review. “Robots are especially useful for dull, dirty, and dangerous jobs outdoors, and you need mounted or mobile cameras that can withstand exposure to the elements.”

Bishop noted the challenge of seeing multiple rows of pallets or lifts at 50m (164 ft.) indoors. “Forklift forks are usually caught in the noise from the floor,” she said. “In addition, human eyes don’t see 2D representations of 3D data very well. The conversation is starting about 3D data output and training sets and labeling using RGB.”

One benefit of a custom emitter rather than Frequency Modulated Continuous Wave (FMCW) is that it avoids “sense crowding” at the 850nm wavelength, explained Bishop, who spoke at RoboBusiness 2019 and CES 2020. “We’re at 940nm, which works in sunlight and won’t interfere with other lidars,” she said.

“With high dynamic range or HDR, we get only 10% reflection in 100% sunlight,” she said. “We’re putting out a lot of photons, cutting through ambient light, and we can get a return from a dull black object like a tire or a mixed-case pallet and subframes.”

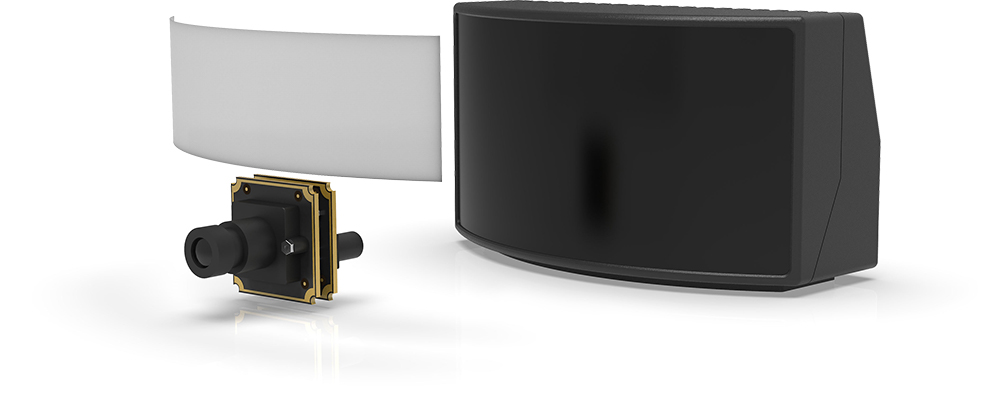

“By separating the laser from the receiver, it’s easier to integrate, and you have better EMI [electromagnetic interference] properties,” she claimed. “Sense One and Osprey offer indirect time-of-flight [ITOF] sensing.”

A modular lidar architecture allows for separation of the emitter from the receiver for use in vehicles. Source: Sense Photonics
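Indirect time-of-flight sensors generally estimate distance from the phase shift between the modulated light they emit and the light that returns, rather than timing a single pulse. The sketch below shows that textbook relation, d = c·Δφ / (4π·f_mod), along with the range at which the phase wraps around. The function names and the 10 MHz modulation frequency are illustrative assumptions, not Sense Photonics specifications.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def itof_distance(phase_rad: float, f_mod_hz: float) -> float:
    """Distance implied by a measured phase shift (generic ITOF relation)."""
    return C * phase_rad / (4.0 * math.pi * f_mod_hz)


def unambiguous_range(f_mod_hz: float) -> float:
    """Largest distance measurable before the phase wraps past 2*pi."""
    return C / (2.0 * f_mod_hz)


if __name__ == "__main__":
    f_mod = 10e6  # hypothetical modulation frequency, chosen for illustration
    print(f"unambiguous range: {unambiguous_range(f_mod):.2f} m")
    print(f"distance at a half-cycle phase shift: {itof_distance(math.pi, f_mod):.2f} m")
```

A lower modulation frequency extends the unambiguous range but typically coarsens depth resolution, which is one reason real ITOF systems often combine several frequencies.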

From solid-state lidar to ‘cameras’

“The technology is mature, but it’s not 100% versatile,” Bishop observed. “The whole lidar industry is moving from ‘sensors’ to ‘cameras.’ ‘Lidar’ is a bad word, and robotics companies aren’t interested. They prefer ‘long-range 3D camera.’”

A lidar intensity image of a forklift. Source: Sense Photonics

“The detector collects 100,000 pixels per frame on a CMOS ITOF with intensity data. RGB camera fusion is really easy, and field-of-view overlay is promising,” she said. “The entire computer-vision industry can attach depth values at longer ranges to train machine learning.”

“When a human driver sees a dog, that may be efficient, but machines need to know what’s in the scene,” said Bishop. “When you generate an RGB image and have associated depth values across the field of view, computer-vision models become more robust. Our solid-state lidar provides more reliable uniform distance values at long range when synched with other sensors.”

Lidar plus RGB data for depth perception. Source: Sense Photonics
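Attaching depth values to an RGB image, as Bishop describes, usually comes down to projecting 3D points into the camera's pixel grid. The following is a minimal sketch using a generic pinhole camera model. It assumes the lidar points have already been transformed into the RGB camera's coordinate frame, and the intrinsics (`fx`, `fy`, `cx`, `cy`) and function name are hypothetical, not part of any Sense Photonics software.

```python
from typing import Dict, Iterable, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in the RGB camera frame, meters


def project_depth_to_pixels(
    points: Iterable[Point],
    fx: float, fy: float, cx: float, cy: float,
    width: int, height: int,
) -> Dict[Tuple[int, int], float]:
    """Map 3D points to {(u, v): depth}, keeping the nearest point per pixel."""
    depth_at: Dict[Tuple[int, int], float] = {}
    for x, y, z in points:
        if z <= 0:
            continue  # behind the camera, cannot be projected
        # Pinhole projection: scale by focal length, shift by principal point.
        u = int(round(fx * x / z + cx))
        v = int(round(fy * y / z + cy))
        if 0 <= u < width and 0 <= v < height:
            # When several points land on one pixel, keep the closest surface.
            if (u, v) not in depth_at or z < depth_at[(u, v)]:
                depth_at[(u, v)] = z
    return depth_at


if __name__ == "__main__":
    cloud = [(0.0, 0.0, 10.0), (1.0, 0.0, 10.0), (0.0, 0.0, 5.0)]
    print(project_depth_to_pixels(cloud, 500.0, 500.0, 320.0, 240.0, 640, 480))
```

The resulting pixel-to-depth map can be stored alongside the RGB frame so that labeled objects carry a range value for training.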

“The software stack then gets more reliable for annotation, and if a distance-imaging chip was in every serious camera for security, monitoring a street, or in a car or robot, it could help annotation for machine learning,” she added. Better data would also help piece-picking and mobile manipulation robots.

“Sense Photonics’ software uses peer-to-peer time sync for sensor fusion and robotic motion planning,” Bishop said. “With rotating lidars, you need to write a lot of code to understand images, as the robot and sensor are moving at the same time. Companies have told us they want low latency and lower power from solid-state systems.”
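Once sensor clocks are synchronized, a common fusion step is to pair each lidar frame with the camera frame captured closest in time. The sketch below illustrates that idea with a nearest-timestamp match and a tolerance. It is an assumption about how such pairing could be done, not a description of Sense Photonics' software, and the function name and frame rates are hypothetical.

```python
from bisect import bisect_left
from typing import List, Sequence, Tuple


def pair_by_timestamp(
    lidar_ts: Sequence[float],
    camera_ts: Sequence[float],
    tolerance_s: float,
) -> List[Tuple[float, float]]:
    """Pair each lidar timestamp with the nearest camera timestamp.

    camera_ts must be sorted ascending. Lidar frames with no camera frame
    within tolerance_s are dropped.
    """
    pairs: List[Tuple[float, float]] = []
    for t in lidar_ts:
        i = bisect_left(camera_ts, t)
        # Only the neighbors on either side of the insertion point can be nearest.
        candidates = camera_ts[max(i - 1, 0): i + 1]
        if not candidates:
            continue
        best = min(candidates, key=lambda c: abs(c - t))
        if abs(best - t) <= tolerance_s:
            pairs.append((t, best))
    return pairs


if __name__ == "__main__":
    camera = [0.0, 0.033, 0.066, 0.1]  # hypothetical camera frame times, seconds
    lidar = [0.0, 0.05, 0.5]           # hypothetical lidar frame times, seconds
    print(pair_by_timestamp(lidar, camera, tolerance_s=0.02))
```

A tight tolerance matters most when the robot is moving, since a stale camera frame would attach depth values to the wrong part of the scene.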

Industrial applications for solid-state lidar

More accurate and rugged solid-state lidar can be useful for warehouse, logistics, and other robotics use cases, especially in truck yards, Bishop said. At MODEX, Sense Photonics demonstrated industrial applications, including security cameras, video annotation, forklift collision avoidance, and supplemental obstacle avoidance.

By combining simultaneous localization and mapping (SLAM) with lidar data, fleet management systems could know about bottlenecks and manage all assets in a warehouse, Bishop said. “They should be warehouse operations systems,” she said. “The big retailers and consultants don’t know the difference between old-school and new-school robotics. Customers don’t know whom to believe when it comes to capabilities.”

“With interference mitigation, our sensors can see mixed-case pallets 3 meters away with 5-millimeter accuracy,” she said. “You can point multiple Sense One sensors at an object, like a pallet or cars passing each other. Most lidar companies don’t have an interference-mitigation strategy.”

Source: Sense Photonics

“Most mobile robotics people want a wide field of view at 15 to 20 meters,” Bishop said. “We’ll have a 95-by-75-degree [field of view], so you can do 180 degrees with two units. It and our Osprey product for automotive will be available soon.”
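The arithmetic behind covering 180 degrees with two 95-degree units can be sketched as follows. The helper names are hypothetical, the optional overlap parameter is an added assumption for illustration, and the swath calculation treats the scene as a flat plane perpendicular to the sensor's optical axis.

```python
import math


def units_for_coverage(target_deg: float, unit_fov_deg: float,
                       overlap_deg: float = 0.0) -> int:
    """Minimum number of side-by-side sensors needed to span target_deg.

    Each adjacent pair of sensors shares overlap_deg of their fields of view.
    """
    if unit_fov_deg <= overlap_deg:
        raise ValueError("overlap must be smaller than the unit field of view")
    if target_deg <= unit_fov_deg:
        return 1
    # After the first unit, each extra unit adds (fov - overlap) degrees.
    return 1 + math.ceil((target_deg - unit_fov_deg) / (unit_fov_deg - overlap_deg))


def swath_width(range_m: float, fov_deg: float) -> float:
    """Width covered at range_m on a plane facing the sensor (fov_deg < 180)."""
    return 2.0 * range_m * math.tan(math.radians(fov_deg) / 2.0)


if __name__ == "__main__":
    print(units_for_coverage(180.0, 95.0))         # two 95-degree units span 180
    print(f"{swath_width(20.0, 95.0):.1f} m wide at 20 m")
```

Two 95-degree units leave up to 10 degrees of shared view in the middle, which gives some margin for mounting tolerances.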

“Sense offers three different fields of view for a 40-meter range outdoors,” she added. “All you need to do is install cameras to the wire, mount them, and get their security certificates and IP address. For 50 meters indoors, you need only one or two units.”

“Because of its usefulness in sunlight, one agricultural company wants to put Sense Photonics’ LiDAR on a sod mower,” said Bishop. The Sense Solid-State Flash LiDAR is available for preorders now.

“Our intention is to build reasonably priced cameras for integration on consumer-grade advanced driver-assist systems, not expensive ones for experimental use,” she said. “Automotive manufacturers are figuring out which sensors they need and don’t need. They’re now optimizing for only the sensors they need and want to make them invisible.”